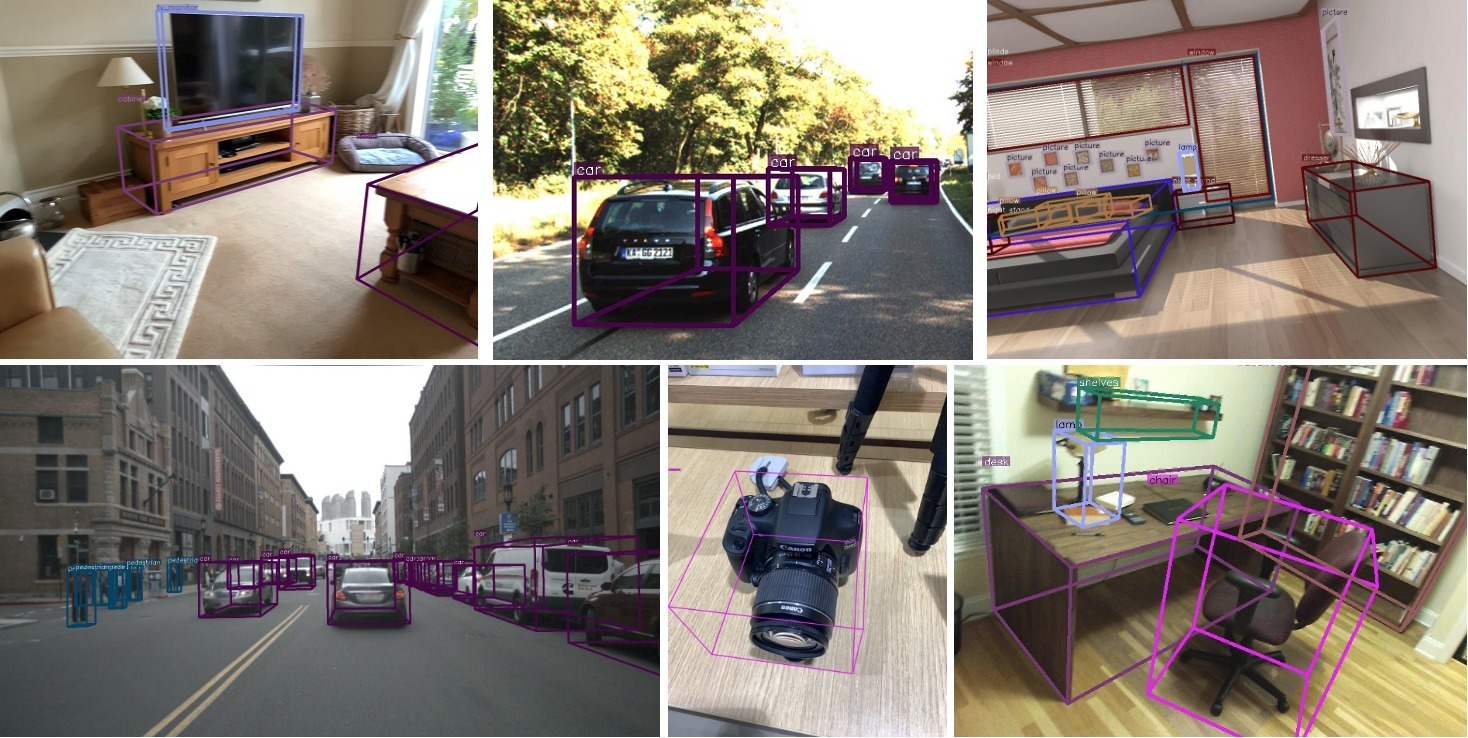

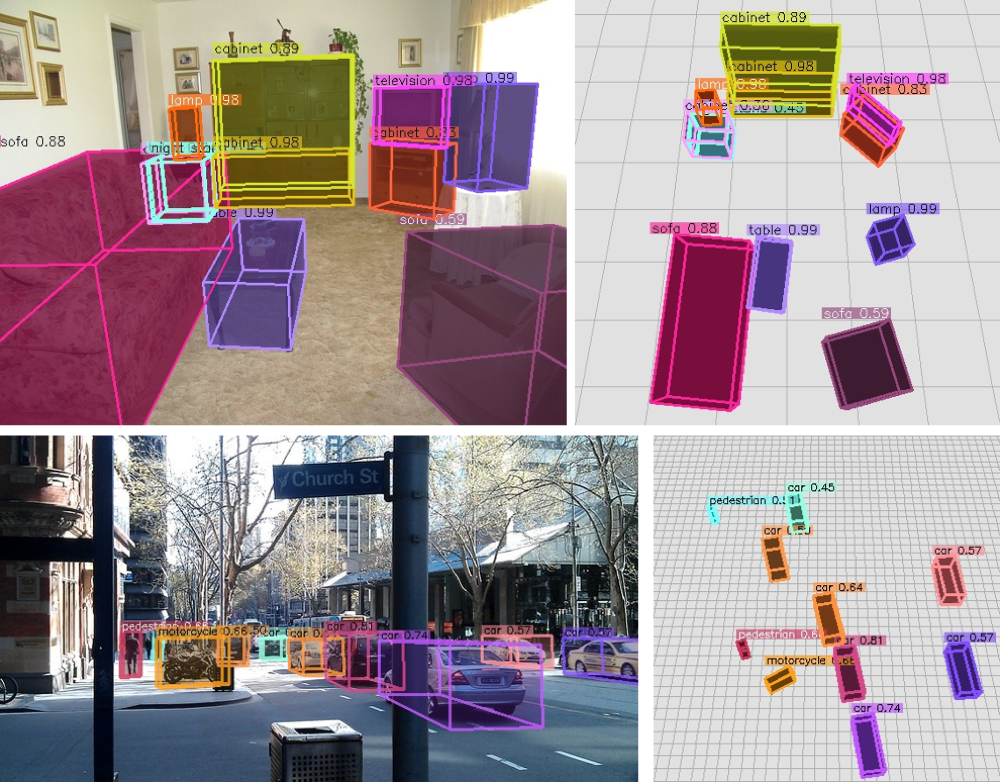

Cube R-CNN Overview

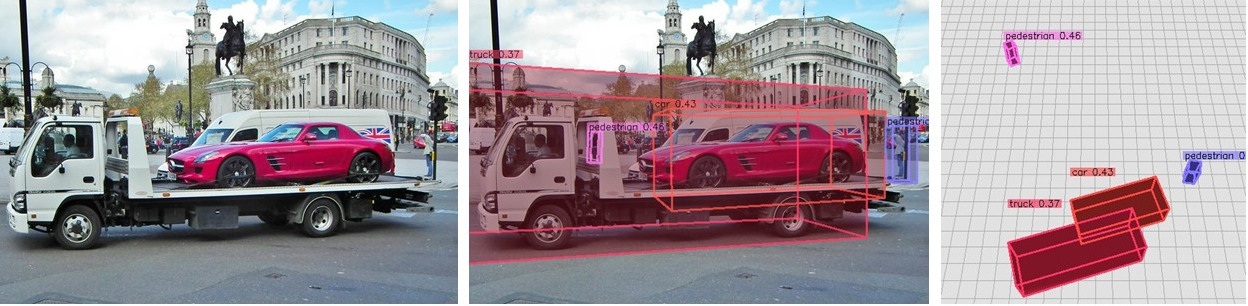

We design a simple yet effective model for general 3D object detection that leverages many key advances in monocular object detection from recent years. At its core, our method builds on Faster R-CNN (detectron2), adding a 3D head that estimates a virtual 3D cuboid, which is then compared to the 3D ground-truth (GT) vertices.
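To make the "cuboid compared to GT vertices" idea concrete, here is a minimal sketch of turning a cuboid's center, dimensions, and rotation into 8 vertices and measuring their distance to ground-truth vertices. The function names, the vertex ordering, and the plain mean L1 distance are assumptions for illustration only; they are not taken from the Cube R-CNN code, and the actual model may use a different parameterization and loss.

```python
def cuboid_vertices(center, dims, rotation):
    """Return the 8 corners of a cuboid in camera space.

    center:   (x, y, z) of the cuboid center
    dims:     (w, h, l) extents along the cuboid's local axes (assumed order)
    rotation: 3x3 rotation matrix as nested lists (local -> camera)
    """
    cx, cy, cz = center
    w, h, l = dims
    verts = []
    for sx in (-0.5, 0.5):
        for sy in (-0.5, 0.5):
            for sz in (-0.5, 0.5):
                # Corner in the cuboid's local frame.
                local = (sx * w, sy * h, sz * l)
                # Rotate into the camera frame, then translate to the center.
                rotated = [
                    sum(rotation[r][c] * local[c] for c in range(3))
                    for r in range(3)
                ]
                verts.append((rotated[0] + cx, rotated[1] + cy, rotated[2] + cz))
    return verts


def vertex_l1(pred_verts, gt_verts):
    """Mean absolute difference over all vertex coordinates (illustrative loss)."""
    total = sum(
        abs(p - g)
        for pv, gv in zip(pred_verts, gt_verts)
        for p, g in zip(pv, gv)
    )
    return total / (3 * len(pred_verts))


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
gt = cuboid_vertices((0.0, 0.0, 5.0), (2.0, 2.0, 2.0), identity)
pred = cuboid_vertices((1.0, 0.0, 5.0), (2.0, 2.0, 2.0), identity)
loss = vertex_l1(pred, gt)
```

Comparing vertices rather than individual parameters means that errors in position, size, and orientation all show up in a single quantity.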

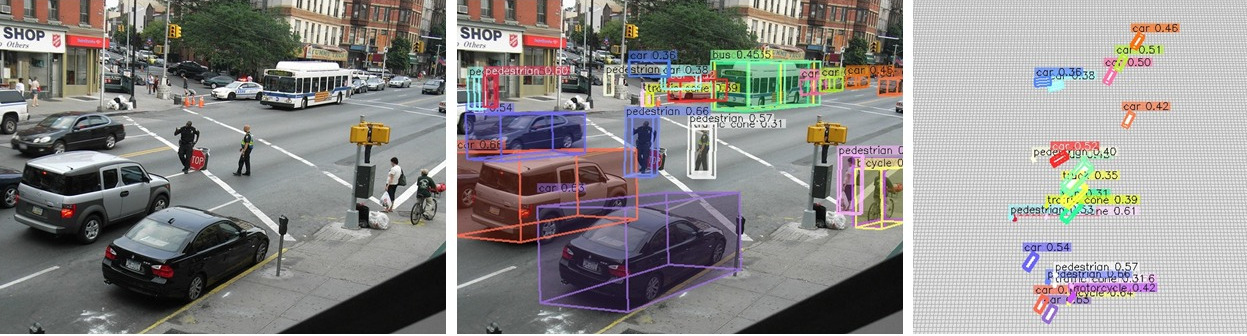

Virtual Depth Visualization

An important feature of Cube R-CNN is that it makes predictions in a virtual camera space, which maintains an effective image resolution and focal length across diverse camera sensors. For example, the two camera sensors (a) and (b) above can produce very similar images even though the metric depth is nearly twice as large for the camera in (b). We show in experiments that addressing the ambiguity introduced by varying camera sensors is critical for scaling to large and diverse 3D object datasets.
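The ambiguity can be illustrated with a small sketch. It assumes a pinhole camera and rescales metric depth by the ratio of a virtual focal length to the real one and by the ratio of the real image height to a virtual one. The function names and the default virtual focal length and height (512) are assumptions chosen for this example, not values confirmed by this page.

```python
def to_virtual_depth(z, f, h, f_virtual=512.0, h_virtual=512.0):
    """Map metric depth z to virtual depth.

    z: metric depth in meters
    f: camera focal length in pixels
    h: image height in pixels
    f_virtual, h_virtual: assumed virtual camera parameters (hypothetical defaults)
    """
    return z * (f_virtual / f) * (h / h_virtual)


def to_metric_depth(z_virtual, f, h, f_virtual=512.0, h_virtual=512.0):
    """Invert to_virtual_depth to recover metric depth at inference time."""
    return z_virtual * (f / f_virtual) * (h_virtual / h)


# Camera (b) has twice the focal length of camera (a), and the object is
# twice as far away, so the two images look alike.
z_v_a = to_virtual_depth(z=2.0, f=500.0, h=480)
z_v_b = to_virtual_depth(z=4.0, f=1000.0, h=480)
same_target = abs(z_v_a - z_v_b) < 1e-9  # both cameras share one virtual depth
```

Under these assumptions the network sees one consistent depth target for both cameras, and the metric depth is recovered afterwards from the known intrinsics with `to_metric_depth`.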